What if we could predict changes to ecosystems in the same way we can predict the weather? An emerging field of science is working towards making that a reality.

In areas such as fisheries, wildlife, algal blooms, wildfire, and human disease, we often need to know how ecosystems and the services they provide might change in the future and how human activities can affect those trajectories. An ecological forecast uses multiple scientific disciplines, such as physics, ecology, and biology, to predict how ecosystems will change in the future in response to environmental drivers such as climate variability, extreme weather conditions, pollution, or habitat change.

Among the recommendations that the Delta Independent Science Board (DISB) is making to the Delta Plan Interagency Implementation Committee (DPIIC) is to include the use of ecological forecasting as a way to better integrate science across agencies and departments.

To learn more about this emerging field, the DISB heard a presentation at its May meeting from Dr. Michael Dietze on near-term ecological forecasting. Dr. Dietze is the founder and chair of the Ecological Forecasting Initiative, an international grassroots research consortium aimed at fostering a community of practice around near-term ecological forecasting. He is also a professor in the Department of Earth and Environment at Boston University and the author of the book Ecological Forecasting.

Dr. Dietze began by noting that the title of the presentation was inspired by a fairly recent USGS report that had the subtitle, ‘21st Century Science for 21st Century Management.’ “I think ecological forecasting is going to make possible the idea of really embracing what modern technology, modern science, and modern observational capacities allow, to think about how we do management differently by leveraging these technologies.”

He also pointed out that reports on ecological forecasting are becoming more common; NOAA, NASA, the North American Carbon Program, and OCTP are incorporating ecological forecasting into their programs.

“This focus is becoming much more common across different agencies, particularly federal agencies, and smaller organizations as well,” he said. “It’s becoming a much more recognized scientific and management frontier.”

WHAT IS A FORECAST?

The paper, Ecological Forecasts: An Emerging Imperative, by Clark et al., in 2001, defined the ecological forecast as ‘the process of predicting the state of ecosystems, ecosystem services and natural capital with fully specified uncertainties.’ Considering uncertainties is very important; uncertainty is what separates forecasting from just running models.

“When it comes to decision-making forecasts, uncertainty translates to risk,” said Dr. Dietze. “I am a strong believer that it is better to be honestly uncertain about a forecast than to be falsely overconfident. False overconfidence, which often occurs when you don’t include uncertainties, can lead to poor decision-making. Sometimes when you ignore the uncertainties, you might say, ‘the model says you should do x,’ when the model did not actually say that; it might say, ‘I don’t really know what’s going to happen.’ So you can translate that breadth of uncertainty into your risk management, either informally or through formal decision science approaches.”

There are two types of forecasting:

Predictions are probabilistic statements about what will happen in the future, based on what is known today. An everyday example would be a weather forecast, which takes what is known about the atmosphere right now, and the implications of that for the weather over the next two weeks.

Projections are also probabilistic statements, but projections are based on conditional boundary scenarios or decision alternatives. An example would be climate change projections; we don’t have predictions of the climate at 2100; rather, we have projections conditional on different scenarios for different economic activities, human population growth, land use, emissions, and other factors. You can’t average over the scenarios; you can only talk about them. Projections are often used in structured decision-making, where different decision alternatives are being considered.

Predictions tend to be short-term; projections can be short-term or long-term. Forecasting is an umbrella term that includes both of these activities.

WHY FORECAST?

One of the main arguments for forecasting is to improve environmental decision-making. Traditional environmental management was often based on concepts of stationarity and equilibrium or steady-state; however, we are no longer living in a steady-state world. Instead, we live in a time where the environment is changing rapidly and often in unprecedented ways. Change is the new normal, so we should expect things will always be moving to new conditions. Therefore, we can no longer rely on historical flood frequencies or historical species distributions as our management objectives.

“So if we can’t rely on stationarity, I think the idea of relying on predictions or forecasts becomes progressively more and more important,” said Dr. Dietze. “When you think about what environmental decision making is fundamentally about, decisions are about what we think is going to happen in the future based on what we know today. Essentially, a forecast is our best scientific understanding of what is likely to happen in the future. It is an explicit way of informing decision-making, and one that I think is becoming more important the less we can rely on historical norms and more we have to rely on models to deal with multiple, simultaneous changes.”

NASA’s carbon monitoring program has been focused on long-term projections out to 2100 under numerous different climate change scenarios; however, Dr. Dietze noted that this has created an unfortunate gap between the work ecologists are doing and the actual needs of most stakeholders and decision-makers, which tend to be on a much shorter timescale.

“Most decision-makers want seasonal, subseasonal, or annual data,” he said. “Many of them don’t know what to do with the projection out to 2100. They need to know what’s happening now or in the near future. And that’s something that this idea of near-term forecasting is designed to address specifically.”

Additionally, the expanded capability to collect environmental data utilizing advancements in sensor technologies and remote sensing, along with distributed data coming in from community science initiatives, contribute to the potential of making predictions on shorter timescales that are more decision-relevant.

An example of a near-term iterative forecast is a forecast of vegetation phenology. “We literally run this every day, and every day we make projections of vegetation’s status. And then, we update those as new observations come in every day, in this case, using a combination of satellite remote sensing and phenocams, which are essentially just webcams pointed at vegetation.”

Dr. Dietze noted that near-term forecasting doesn’t just improve our decision-making; it fundamentally improves our science.

“I’ve spent 20 years making projections to 2100, but I’ve never been to 2100 to see if I’m any good at it,” he said. “By contrast, when I’m making my phenology forecast, I know tomorrow whether the forecast I made today was any good or not. So you get this more continual feedback on an ongoing basis about how you’re doing that lets you update your understanding, update your models, and improve.”

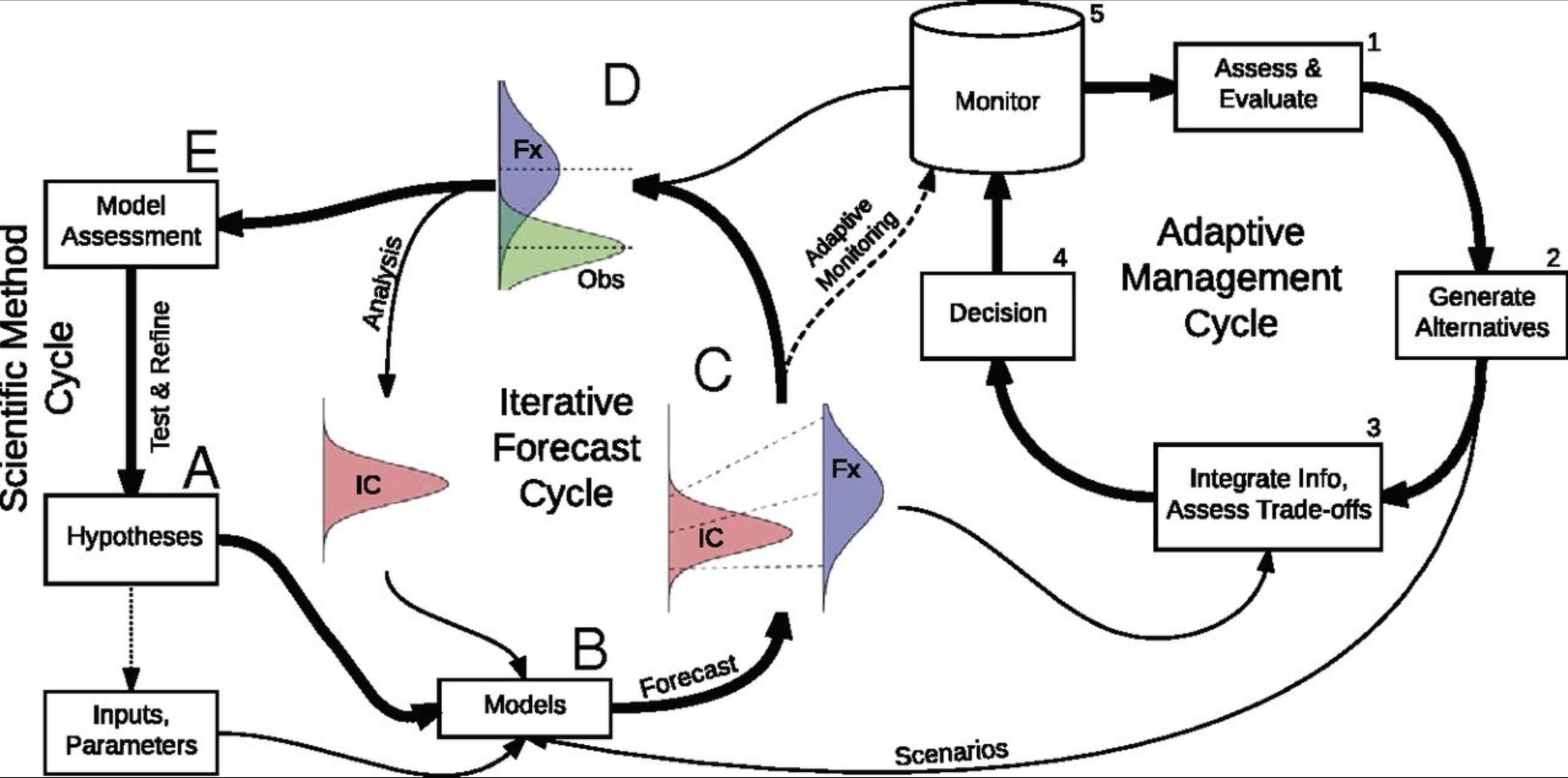

“I argue that that iterative cycling between making forecasts and performing analyses is completely compatible with how we think about how the scientific method works,” he continued. “Our models represent our hypotheses about how the world works. We use them to make predictions. We test those hypotheses against new data as they come in. We update that and we iterate, so we have this potential to use near-term forecasting, not just to improve decision making, but actually to learn quicker and to continually be confronting our hypotheses in our models with data from reality on an ongoing basis.”

Forecasts also force us to be quantitative and specific, which means making falsifiable predictions and hypothesis tests. In effect, a forecast pre-registers what you think will happen and records it in a public place.

“Forecasts force you to be a priori,” said Dr. Dietze. “They force your validation to be out of sample, so you can’t essentially overfit to data that hasn’t been collected yet, which is a way of making what we’re doing more robust.”

Making predictions every day in an iterative forecast cycle is a win-win, he said. Forecasting has the ability to intersect with the scientific learning cycle and the decision-making and adaptive management cycle. We use the alternatives being considered for management to drive model scenarios; those scenarios go into decision-making processes, and the monitoring data feeds into the forecasting system itself. There is also the underexplored possibility of using forecasts to improve the way we do the monitoring, making the monitoring more adaptive in space and time to where that data is most valuable to our forecasts.

It’s important to be honest about the fact that early forecasts will likely be poor, but they will improve over time. The graph shows how the skill of weather forecasting has improved over time. The X-axis also notes the advancements in technology over the years.

“One thing that’s noticeable about this graph is there are no jumps,” Dr. Dietze said. “We didn’t get better forecasts the second we get better computers. We didn’t get better forecasts the second we get better monitoring data. We have this slow and steady improvement in skill that I think is really reflecting that by confronting our models with reality every single day and learning iteratively, we continually improve our skill.”

“If we just started back in the 50s and said, ‘numerical weather forecasts are not very good, we should stop doing that,’ we wouldn’t have the skill we have today,” he continued. “If we said, ‘we should wait until the models are good enough and then we’ll start doing numerical weather forecasting,’ it would have never gotten good enough. So I’m a strong believer that the only way to get forecasts to be good is to start making forecasts. It’s that iterative prediction itself that is the way that we forecast better. So I sometimes get feedback that folks worry that our models aren’t good enough yet to make predictions, and I strongly believe that they will never be good enough to make predictions until we use them to make predictions.”

HOW DO WE FORECAST?

There are two steps to the forecast cycle: the forecast step and the analysis step.

Forecast

The forecast step begins with the initial conditions (IC) and the uncertainty about the current state of the system. These are fed into the model along with the drivers that project the system into the future and the model parameters, each with its own uncertainty, and the model is then used to make probabilistic predictions.

There are various ways to do that; Dr. Dietze’s book details the different analytical and numerical methods and what they can be used for.

“I will say that by and large numerical methods are essentially the norm in ecological forecasts,” he said. “This comic from XKCD does a great job of explaining how these numerical ensemble methods work. We’ve started to see these things sort of every day now when we look at weather forecasts or forecasts of hurricane tracks. There’s a model that’s run with samples of different representations of realities; we’re sampling our weather forecasts when we drive ecological forecasts, we sample our uncertainties about the current status and parameters, and we just run the model 1000s of times, and we get 1000s of predictions.”
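
The ensemble approach described above can be sketched in a few lines of Python. This is a minimal illustration, not the method used in any of the forecasts discussed: the process model (discrete logistic growth), every numeric value, and the function names are assumptions chosen only to show how initial-condition, parameter, driver, and process uncertainty are each sampled once per ensemble member.

```python
import random
import statistics


def logistic_step(n, r, k):
    """One time step of discrete logistic growth (a stand-in process model)."""
    return n + r * n * (1.0 - n / k)


def ensemble_forecast(n_members=1000, horizon=10, seed=42):
    """Run the model once per ensemble member, sampling each uncertainty source."""
    rng = random.Random(seed)
    trajectories = []
    for _ in range(n_members):
        # Initial-condition uncertainty (hypothetical mean and spread).
        n = max(rng.gauss(10.0, 1.0), 0.1)
        # Parameter uncertainty: each member draws its own growth rate.
        r = rng.gauss(0.3, 0.05)
        path = []
        for _ in range(horizon):
            # Driver uncertainty: e.g., a weather-dependent carrying capacity.
            k = max(rng.gauss(100.0, 10.0), 1.0)
            # Process error: variability the model does not explain.
            n = max(logistic_step(n, r, k) + rng.gauss(0.0, 0.5), 0.0)
            path.append(n)
        trajectories.append(path)
    return trajectories


def summarize(trajectories):
    """Ensemble mean and standard deviation at each forecast step."""
    by_step = list(zip(*trajectories))
    means = [statistics.fmean(step) for step in by_step]
    sds = [statistics.stdev(step) for step in by_step]
    return means, sds


trajectories = ensemble_forecast()
means, sds = summarize(trajectories)
for t, (m, s) in enumerate(zip(means, sds), start=1):
    print(f"step {t}: mean={m:.1f}, sd={s:.1f}")
```

The spread of the ensemble at each step is the forecast uncertainty; quantiles of the members could be reported in place of the standard deviation.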

The uncertainty in a forecast tends to increase with time, he noted. “That makes sense because if you’re more confident about the future than the present, you’ve probably done something wrong. Then at some point, the uncertainty becomes sufficiently large that we’re not doing any better than chance. This background or null uncertainty defines the limit of usability or usefulness of any forecast in space, time, or whatever dimension you’re forecasting. If we’re lucky, those overlap with decision-relevant timescales.”

“One of the interesting questions is, what controls the rate of growth of the uncertainty because that is essentially what controls how far in the future we can make predictions,” he continued. “If we can understand those uncertainties, we can chip away at them and try to increase the skill of our forecasts and try to increase the useful time-span of these forecasts.”

The slide shows the equation used to analyze and partition the sources of uncertainty that control predictability, such as internal factors, external forcings such as the weather, uncertainty about the ability to constrain parameters, random effects such as unexplained heterogeneity and variability, and process errors.
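
The slide itself is not reproduced here. As a hedged sketch, a partitioning of this kind is often written as a first-order (Taylor series) approximation of the forecast variance. The notation below is an assumption for illustration and may differ from the slide: $Y_t$ is the state, $X$ the drivers, $\theta$ the parameters, $\alpha$ the random effects on those parameters, $\varepsilon$ the process error, and $f$ the model that advances the state by one step.

$$
\mathrm{Var}[Y_{t+1}] \approx
\left(\frac{\partial f}{\partial Y}\right)^{2} \mathrm{Var}[Y_t]
+ \left(\frac{\partial f}{\partial X}\right)^{2} \mathrm{Var}[X]
+ \left(\frac{\partial f}{\partial \theta}\right)^{2} \mathrm{Var}[\theta]
+ \left(\frac{\partial f}{\partial \theta}\right)^{2} \mathrm{Var}[\alpha]
+ \mathrm{Var}[\varepsilon]
$$

Each term pairs a sensitivity with an uncertainty: internal stability with initial-condition uncertainty, driver sensitivity with driver uncertainty, and parameter sensitivity with parameter uncertainty and with random-effect variability, plus the process error.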

The paper, Prediction in ecology: a first-principles framework, from Ecological Applications October 2017, goes into detail about which types of ecological systems will be more or less predictable, based on the prevalence of the different sorts of uncertainty in each.

“It’s an open question more broadly as we’re still in the early days of learning about which ecological systems are going to be more or less predictable,” said Dr. Dietze. “To me, that’s a really exciting scientific frontier.”

Analysis

Once the forecast is made, it is updated when there is new data.

“Now we have a forecast that is probabilistic, we have observations, we have uncertainty about them as well, and we need some way of combining our current forecast with this new observation,” said Dr. Dietze. “What we don’t want to do is just throw out the forecast and use the new observation because there’s uncertainty in the observations as well, so we use that updated state to feed back into the model and update our forecasts.”

“We reconcile the forecasts and observations using statistical analyses. For much of the work that I do, I use a statistical theorem called Bayes’ theorem, which is designed for this iterative updating. It’s designed to say that I have some prior understanding of the world and as new information becomes available, I have a statistical theory that tells me how to combine these two pieces of information.”

The statistical analysis provides a method that weights the information received: if the data is imprecise (the green area on the slide is relatively large), the update largely sticks with the forecast but might nudge a bit towards the new data; new noisy data shouldn’t derail forecasts, Dr. Dietze explained. By contrast, if the data is well constrained and the model is very uncertain, the update moves much closer to the new data.

“One important thing that we’ve learned from Bayes’ theorem, though, is that the fusion of the model and the data is always going to be more precise than either alone,” he said. “So the wonderful thing about doing this forecasting is our understanding of the system is always going to be better having done it than just relying on pure monitoring data.”
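
The weighting described above can be illustrated with the simplest case, in which both the forecast and the observation are treated as Gaussian. That Gaussian assumption, the function name, and the numbers are all illustrative and are not taken from the presentation. Under this assumption, Bayes' theorem reduces to a precision-weighted average, and the updated variance is smaller than both the forecast variance and the observation variance.

```python
def gaussian_update(forecast_mean, forecast_var, obs, obs_var):
    """Combine a Gaussian forecast (prior) with a Gaussian observation."""
    forecast_precision = 1.0 / forecast_var
    obs_precision = 1.0 / obs_var
    # Precisions add, so the posterior is more precise than either input.
    post_var = 1.0 / (forecast_precision + obs_precision)
    # The posterior mean weights each source by its precision.
    post_mean = post_var * (forecast_precision * forecast_mean + obs_precision * obs)
    return post_mean, post_var


# Case A: confident forecast, noisy observation; the update stays near the forecast.
mean_a, var_a = gaussian_update(forecast_mean=10.0, forecast_var=1.0, obs=14.0, obs_var=9.0)

# Case B: uncertain forecast, precise observation; the update moves toward the data.
mean_b, var_b = gaussian_update(forecast_mean=10.0, forecast_var=9.0, obs=14.0, obs_var=1.0)

print(f"A: mean={mean_a:.2f}, var={var_a:.2f}")
print(f"B: mean={mean_b:.2f}, var={var_b:.2f}")
```

In both cases the posterior variance is lower than the smaller of the two input variances, which is the Gaussian version of the claim that the fusion is more precise than either source alone.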

The other powerful thing about these data fusion algorithms that underlie this iterative updating is they’re good at combining multiple pieces of information.

“I have a terrestrial carbon bias, so I think about things like how I can use forecast models as a scaffold for combining information, such as satellite data, detailed physiological data, observational data, experimental data, and monitoring data,” explained Dr. Dietze. “I have different ways of fusing multiple sources of information together within these iterative forecasting frameworks.”

“So if I have a prediction, where two things that I’m predicting covary, such as the carbon flux in the landscape and the amount of conifer vegetation on that landscape, if I make an observation for one of those things, I update that using Bayes theorem, but I also update everything it covaries with, so I can improve my understanding of things I don’t actually observe directly based on the covariance structure within these forecasting models. And it also works both ways; it allows us to fuse information.”
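
The covariance-based update described above can be sketched as a Kalman-style analysis step for two state variables, only one of which is observed. This is a toy under stated assumptions: the forecast is Gaussian, the observation is a direct measurement of one variable, and the variable names, numbers, and function name are hypothetical.

```python
def update_with_covariance(mean, cov, obs, obs_var, observed=0):
    """Update a two-variable Gaussian forecast from one direct observation.

    mean: [x0, x1]; cov: 2x2 nested list; observed: index of the measured variable.
    """
    k = observed
    innovation = obs - mean[k]            # data minus forecast for the observed variable
    s = cov[k][k] + obs_var               # forecast variance plus observation variance
    gain = [cov[i][k] / s for i in range(2)]
    # The unobserved variable moves in proportion to its covariance with the observed one.
    new_mean = [mean[i] + gain[i] * innovation for i in range(2)]
    new_cov = [[cov[i][j] - gain[i] * cov[k][j] for j in range(2)] for i in range(2)]
    return new_mean, new_cov


# Hypothetical forecast: carbon flux (index 0) and conifer fraction (index 1),
# with a positive covariance between them.
forecast_mean = [2.0, 0.5]
forecast_cov = [[1.0, 0.08], [0.08, 0.01]]

# Only the carbon flux is observed, yet both estimates are updated.
new_mean, new_cov = update_with_covariance(forecast_mean, forecast_cov, obs=3.0, obs_var=0.25)
print(new_mean)
print(new_cov)
```

Passing `observed=1` runs the update the other way, with an observation of the conifer fraction informing the carbon flux.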

EXAMPLES OF ECOSYSTEM FORECASTS

Next, Dr. Dietze turned to examples of ecosystem forecasts that are being used today. It’s an exciting area, he said, because it touches all parts of the discipline of ecology. Forecasts are being used for everything from algal blooms, coral bleaching, infectious disease, and soil health to disturbances like wildfire and managed populations, all the way to forecasts run synchronously with experimental manipulations.

“I have a friend at Stony Brook who is literally forecasting penguin populations in Antarctica using data from the NASA satellites,” he said. “I don’t think there’s a cooler project in the world than forecasting penguins from space. Folks in the southwest are forecasting drought mortality. It’s really spanning everything and really provides us a way of bringing a community together and seeing commonalities in the predictability of systems. There’s a lot that we can learn about ecology on the fine-scale, but also on the broad scale.”

Some specific examples:

NOAA’s Monterey office has a product called EcoCast, a fisheries bycatch forecast for the West Coast that combines information about the spatial range of different potential bycatch species, such as sharks, sea lions, leatherback turtles, and swordfish, and how they are moving dynamically in space and time.

“They are sending out alerts to the fishers every day who get updated maps that they’re actually using in practice to make fishing decisions. It’s interesting that there’s no actual federal legislation requiring them to make use of these forecasts yet, but they’ve adopted them because they’re actually useful. They are helping them avoid bycatch and target their target species better.”

Several harmful algal bloom forecasts exist around the world, put forward by NOAA and other agencies. The graph on the far right of the slide shows a forecast of the risk of encounters with Atlantic sturgeon on the East Coast.

All of these examples are forecasts that are literally updated every single day.

The Smart and Connected Water Systems is a forecasting system being developed by Virginia Tech. The team has highly instrumented a reservoir that they use to make forecasts through time, starting with water temperature and lake turnover. Forecasting lake turnover is particularly important as it is a big water quality event, so any lead time reservoir managers can get helps them make proactive management decisions, such as ordering supplies or scheduling staff. The Virginia Tech team has been successful in forecasting turnover 1-2 weeks in advance.

“This example is one where they’ve been working very closely with the reservoir managers from the get-go,” said Dr. Dietze. “The managers get a daily forecast to work with that has the uncertainties, and they’ve worked to design the alerts that that community specifically needs. It’s been so successful that the reservoir managers have turned over operations of the reservoir’s oxygenation system to the research team, who use it to optimize oxygenation levels of the lake, but also to run some experiments about things such as methane forecasts … It turns out there are trade-offs: oxygenation affects the risk of algal blooms, but it also trades off with carbon storage capacity. So there’s no one best solution.”

Dr. Dietze’s lab runs forecasts of the soil microbiomes across the US, demonstrating the ability to increase predictability across spatial scales and by genetic levels.

The lab runs forecasts of ticks and tick-borne disease, primarily driven by NEON data. The forecasts are updated as new data becomes available.

“This is an interesting case because our forecasts of the actual tick population are very different than forecasts of observations because we have this capture probability problem, as we only ever get to see the ticks we capture,” he said. “This is a very common problem in fisheries as well. You only see a subset of the organisms you’re capturing, and the population itself is unobserved and latent. This graph shows that, for the larvae, we are actually able to capture orders-of-magnitude variability and shifts in the population as well.”

Dr. Dietze’s lab provides carbon and water flux forecasts between the land and atmosphere sent out in a daily email.

“It’s a daily forecast of plant water stress and carbon sequestration capacity,” he said. “This is a system that started as a series of site-level forecasts and working with NASA carbon monitoring. We’re in the process of scaling this up to continental carbon forecasts. We’ve got a carbon hindcast running. So we’ve run it historically, and we’re in the process of pushing it to a real-time system.”

RESOURCES

The Ecological Forecasting Initiative (EFI) is an organization established specifically to build a community of practice around ecological forecasting. It is a broadly interdisciplinary community that includes ecologists, social scientists, computational scientists, decision scientists, physical scientists, and environmental scientists. It is also a diverse community in that it includes academics, agency folks, NGOs, and a small but growing number of folks in industry. EFI is developing community-scale cyberinfrastructure and computational workflow tools to support ecological forecasts so that not everyone has to reinvent the workflows needed to run them.

“EFI aims to bring these communities together, and realize that a lot of the problems that we have in making ecological forecasts, even though they are very diverse in their applications, have a lot of common cross-cutting challenges,” Dr. Dietze said. “EFI is organized around the common cross-cutting themes. Some of them are technical, such as methods in cyber infrastructure and decision methods. Some of them are about education and broadening the community, and bringing more capacity building. We have a lot of educational resources up on our website; there are videos, there are course materials, there’s the book I mentioned earlier, and we run a summer course every year.”

EFI is currently running an open forecasting challenge using data from the National Ecological Observatory Network (or NEON). Fifty-six teams from around the world are participating in the challenge as a way of building the community and developing algorithms.

The EFI website has a robust collection of information on ecological forecasting, including technical tools, lessons learned, ethics, research, operations, a blog, a community newsletter, social media, and a GitHub repository.

Q&A

QUESTION: A lot of uncertainties are accumulating … is there any effort to compare your forecast with data and do some data assimilation type things while you are going? Can you give some thoughts on the application of this type of framework to the Delta?

Dr. Dietze: “A lot of us are using these iterative data assimilation techniques where you get to compare your forecast to new observations every single day. Often models are built on some calibration data set, but the models are updated continuously as new information becomes available, so they’re constantly learning.

“One of the things that is exciting is the paper I’ve been working on that shows that you can start with an ensemble of multiple models that range from simple linear models to more complex mechanistic models and that as you increase the amount of information you have about any process over time, you iteratively learn not just about the states of your system and the parameters in your models, you learn about what model structures work best. So you forecast with simple models early, but those models can grow in complexity. And we can do that continuously. With traditional model building, you might go out and measure something for five years, and then you update your model. So I think the exciting thing here is the ability to do this continuously.

“In terms of the Delta itself, I’ll admit that I am not a wetland or coastal or river ecologist by training; since we’ve launched EFI, I’ve started working much more with aquatic ecologists than I ever did before. But there are lots of different places to think about this. For example, on the population side, are there managed species that are important to you? You have fishery species threatened or endangered species, and folks are doing ecological forecasts for invasive species, as well. And then there’s a lot of folks doing forecasts on the ecosystem and biogeochemical side. So they are forecasting not just water flow, but the nutrients in the water, the flows coming in, and turnovers within systems.”

“I think that some of the most successful forecasts are the ones where there is some form of continual data coming in. Things that could be measured by sensors are low-hanging fruit, but so are things where there are monitoring campaigns in place, so that you have data on populations and other things to continually check against.”
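As a rough illustration of the daily forecast-and-update cycle described in this answer, the sketch below uses a scalar Kalman filter, one common data assimilation technique. It is a minimal example under stated assumptions, not the method used by Dr. Dietze or EFI: the `State` class, the function names, the persistence model, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class State:
    mean: float  # current best estimate of the quantity being forecast
    var: float   # uncertainty (variance) around that estimate


def forecast_step(state, growth=1.0, process_var=0.5):
    """Project the state one day ahead; uncertainty grows by the process error."""
    return State(mean=growth * state.mean,
                 var=growth ** 2 * state.var + process_var)


def analysis_step(prior, obs, obs_var):
    """Blend the forecast with a new observation (scalar Kalman update)."""
    gain = prior.var / (prior.var + obs_var)  # weight given to the observation
    return State(mean=prior.mean + gain * (obs - prior.mean),
                 var=(1.0 - gain) * prior.var)


def run_cycle(initial, observations, obs_var=1.0):
    """Alternate forecast and update; None marks a day with no observation."""
    state, history = initial, []
    for obs in observations:
        prior = forecast_step(state)
        state = prior if obs is None else analysis_step(prior, obs, obs_var)
        history.append((prior, state))
    return history


if __name__ == "__main__":
    # Hypothetical daily observations with one missing day.
    daily_obs = [10.2, 9.8, None, 10.5, 10.1]
    for day, (prior, post) in enumerate(run_cycle(State(8.0, 4.0), daily_obs), 1):
        print(f"day {day}: forecast {prior.mean:.2f} (var {prior.var:.2f}), "
              f"updated {post.mean:.2f} (var {post.var:.2f})")
```

Each observation pulls the estimate toward the data and shrinks its variance, while a day without data lets the uncertainty grow, which is why a continual data stream matters for this kind of forecast.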

QUESTION: I’m curious about how you’ve enabled this great progress. I happen to be aware that NEON was a little slow out of the starting gate. So the question is, did you have to get a sort of a certain amount of data that enabled this network? What really enabled this network?

Dr. Dietze: “EFI is a network; the network itself doesn’t produce the forecast. It’s more that we brought a community together that allowed folks thinking about these things by themselves to realize that there is a pretty big community of folks thinking about it now. There are almost 400 people on that Slack now; the community is able to share information about what’s going on. We have a dozen active working groups tackling the challenges that the community shares. And new chapters are popping up. We launched a Canadian chapter a couple of years ago, and we’re in the process of launching an Australian chapter.

“So for us, it’s about building communities and bringing people under the umbrella and helping them realize that we were all working on the same problems, often without being aware of it because of silos. Someone developing great techniques for forecasting the next emergence of epidemic disease has the same mathematical problem as the person forecasting the next invasive species, and they didn’t know that they were working on essentially the same problem. So I think it’s that building a community and sharing strength that’s been really valuable. I think that’s why we’ve seen kind of a sprint of activity over the last few years relative to what came before it. I think the data revolution in ecology is also really fueling this, the fact that we have better monitoring data than we’ve ever had before.”

QUESTION: I was particularly interested in backcasting; you briefly mentioned the word hindcasting, but I found that really useful to test hypotheses, and particularly where events are rare in time or space, which is not what you’re talking about, but what can be important in the Delta. Can you talk about that?

Dr. Dietze: “I think hindcasting and backtesting have been a valuable tool that the modeling communities have used for a very long time. I think one of the things that we’re adding that is new is, for example, in our carbon monitoring hindcasting, we’re doing this as a formal reanalysis where we’re not just running a model and then seeing what happened; we’re running a model in this iterative updating mode in order to come up with the best estimates of what the state of the system was in the past. That helps us with closing budgets and combining multiple pieces of information, and then gives us a composite data product that helps us understand the variability in space and time.

“Also, when we think about projections, conditional on decision alternatives or scenarios, projections are what folks in the forecasting community use to deal with rare events. Sometimes these are called wargames. So when you have events that are sufficiently rare that you can’t ascribe a hard probability to them or include them dynamically in the forecast, you can still run them as scenarios in that mode. And so, a lot of forecasting for low-probability events is done in that mode. And in that case, it’s widely acknowledged that one of the key limits for those low-probability events has always been failures of imagination. So the forecasts fail when events happen that we didn’t anticipate and didn’t include in those sets of scenarios. Because it’s very hard to include them in models as things we’re predicting dynamically if they don’t happen, we’ve never seen them before, or they happen very, very rarely.”

DR. JAY LUND COMMENT: “What I really enjoyed about your presentation was the discussion about how you need to start modeling before you have a model that works. And how over the course of your model not working, you can make it work better. I think we’re saddled in this system in many places with a more traditional ecologist view of these matters where, after we’ve collected 40 years’ worth of data and hypothesis testing, maybe we will know enough that we can start to build a model, rather than what I think of as an engineering view where you start off building a model, and then you know what data to collect. And you use the data that you collect to improve the model. And I appreciate that. I think that’s a major lesson for us here.”

Dr. Dietze: “We have done the sorts of activities that you kind of alluded to there, where you can actually mathematically say, given the model structure I have and its current uncertainties, what is the next most valuable piece of information? You can easily do these uncertainty analyses to say, of these five dominant uncertainties, which one dominates any particular forecast and, within that, what are the processes and parameters, and then you can design field campaigns. We have a couple of examples in my lab where we’ve done these very targeted field campaigns driven by detailed analyses of forecast uncertainty. And it’s really effective at reducing those uncertainties.”
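One simple way to carry out the kind of uncertainty analysis described here is a one-at-a-time Monte Carlo experiment: switch on a single source of uncertainty, hold the others at their central values, and see how much forecast variance results. The sketch below assumes a toy growth model with four hypothetical sources (`x0`, `r`, `driver`, `process`) and made-up spreads; it is not drawn from the presentation and does not reproduce the five categories Dr. Dietze refers to.

```python
import random
import statistics

# Hypothetical central values and standard deviations for each uncertainty source.
DEFAULTS = {"x0": 10.0, "r": 0.5, "driver": 2.0}
SPREAD = {"x0": 1.0, "r": 0.1, "driver": 0.3, "process": 0.4}


def simulate(x0, r, driver, noise_sd, rng, steps=10):
    """Toy model: x[t+1] = x[t] + r * driver + process noise."""
    x = x0
    for _ in range(steps):
        x = x + r * driver + rng.gauss(0.0, noise_sd)
    return x


def forecast_variance(active, n=4000, seed=1):
    """Forecast variance when only the sources named in `active` are uncertain."""
    rng = random.Random(seed)
    finals = []
    for _ in range(n):
        draw = {k: rng.gauss(v, SPREAD[k]) if k in active else v
                for k, v in DEFAULTS.items()}
        noise_sd = SPREAD["process"] if "process" in active else 0.0
        finals.append(simulate(draw["x0"], draw["r"], draw["driver"], noise_sd, rng))
    return statistics.pvariance(finals)


def rank_sources():
    """Rank sources by the forecast variance each contributes on its own."""
    variances = {source: forecast_variance({source}) for source in SPREAD}
    return sorted(variances.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    for source, var in rank_sources():
        print(f"{source:8s} contributes variance of about {var:.2f}")
```

In this toy setup the growth parameter `r` dominates, so a targeted campaign would measure whatever constrains `r` first. A real analysis would also account for interactions between sources, which this one-at-a-time approach ignores.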

DR. JAY LUND: “There are lots of wonderful modern techniques to help you do that. Data is really much more expensive than modeling these days. And so, if you can use the modeling to help you with the monitoring and the data collection, I think you can accelerate your understanding much faster and better.”

QUESTION: My question is related to the whole adaptive management piece of this. And I’m curious, just to hear your thoughts on what you think some of the biggest challenges are for integrating ecological forecasting and decision making? Have you really thought about some of the areas where it is more challenging, and is there a role for coproduction of these types of models with decision-makers?

Dr. Dietze: “EFI is a huge, huge advocate of the idea of coproduction. It’s one of the places where there’s complete consensus among the folks in our community that you need to work with the decision maker from as early as possible in developing a forecast to make sure you’re forecasting the thing they need. And to get feedback, even on little things like how you design your alerts when you send them out, because that can affect the way that they interpret them. I would say, beyond that, there need to be more social and decision scientists. We’ve got a few. They’ve been great and really helped us a lot.”

“One of the things that I notice is I encounter a lot of problems where the hard part about including the management components in the forecasts is actually getting good data on what’s happened in the past under different management options, or essentially having the training data on what happens under different management scenarios. That can be challenging, in some sense, because it isn’t big data right now. The management decisions people make, I can see them implicitly in their effects in satellite data and things like that. But I don’t have a record of what people were planning on doing. But when you dive into the gory details, in some ways, it’s a perfect fusion … if you go back and look at some of the original adaptive management literature, the idea of forecasting was kind of implicit in there, the idea that you make decisions that are contingent on learning new things. Then we would update our understanding, and the forecasts are giving us that formal mechanism of updating our understanding and making projections under those different decision alternatives. So I think it’s a perfect fusion.”

“There is a lot of decision science literature on how you make decisions under uncertainty and how you can formally combine these predictive models with user preferences and utility functions to account for the risk associated with the decision. I think it’s actually been really informative to the scientists, because a lot of scientists I know think that there’s like a fixed amount of uncertainty you need to get down to for your forecast to be useful. More often, what you find is that your forecast just needs to be better than what is currently out there to provide useful information for making a decision. And sometimes, that’s just doing better than chance; it comes back to that idea of when our models are good enough. And also, even beyond that, the forecast needs to be good enough that you can make good decisions, that your decision outcomes are not highly sensitive to the forecast model. And that doesn’t mean you need to reduce uncertainties to zero; often, you can design and have robust decision choices that will work, despite the fact that there is uncertainty in the forecast.”
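The point about robust decisions can be illustrated with a small expected-utility calculation. The sketch below is a hypothetical example, not something shown in the presentation: the two actions, their payoffs, the ensemble values, and the threshold are all invented for illustration.

```python
def expected_utility(p_event, utilities):
    """utilities = (utility if the event occurs, utility if it does not)."""
    u_event, u_no_event = utilities
    return p_event * u_event + (1.0 - p_event) * u_no_event


def best_action(p_event, actions):
    """Return the action name with the highest expected utility."""
    return max(actions, key=lambda name: expected_utility(p_event, actions[name]))


def probability_from_ensemble(members, threshold):
    """Fraction of ensemble members that exceed a management threshold."""
    return sum(m > threshold for m in members) / len(members)


# Hypothetical payoffs in arbitrary units.
ACTIONS = {
    "act_early": (-10.0, -10.0),  # fixed cost of acting, whether or not the event occurs
    "wait": (-100.0, 0.0),        # large loss if the event occurs and nothing was done
}

if __name__ == "__main__":
    # Hypothetical ensemble forecast of some indicator and a management threshold.
    ensemble = [12, 18, 25, 9, 30, 22, 15, 27]
    p = probability_from_ensemble(ensemble, threshold=20)
    print(f"forecast probability of exceeding threshold: {p:.2f}")
    print("preferred action:", best_action(p, ACTIONS))

    # The choice does not change anywhere in a wide band of probabilities, so the
    # forecast only needs to be good enough to place p above the break-even point.
    for trial_p in (0.3, 0.5, 0.7):
        print(f"p = {trial_p}: {best_action(trial_p, ACTIONS)}")
```

With these payoffs the break-even probability is 0.1, so any forecast that reliably puts the probability above that value leads to the same decision, even if the forecast itself remains quite uncertain.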

QUESTION: In the water resources engineering field, the phrase is now, ‘Stationarity: it’s not just dead, it’s a zombie.’ We have to deal with the infrastructure built more than 50 years ago, all these aging dams. We have water rights that are huge constraints. Can you talk about how this approach can help us know what to do first? How do we optimize taking out this dam versus forcing some change in how water rights are allocated?

Dr. Dietze: “That’s a hard question. In the abstract, I could say that if we have models that speak to some of these things, you can run them in a forecasting mode under these different decision alternatives to start looking at possible outcomes. We can also use the uncertainties in this iterative way to help you understand what information is missing and what will be most useful for further constraining the uncertainties down to the level that affects the decisions. I think there’s an untapped potential for adaptive monitoring to be able to use the fusion of the forecasts and the monitoring data simultaneously to gather the information where it’s most valuable.”

“One thought example I often use is if I have an ensemble of multiple models making predictions. When they all predict the same thing, collecting data at that point in time doesn’t actually tell me much. But the second that all those different ensemble members make different predictions, that’s when new information would actually tell me something about my competing hypotheses. So you could take the measurements where they’re most valuable. For example, with the Falling Creek Reservoir smart forecasts, they could do an intense bout of data collection at the times that were most critical to the management-relevant events for their system because the forecast enabled them to do that. They could anticipate when those events were going to come and ended up with a much more informative dataset than if they had just said, ‘Oh, I’m going to use this legacy monitoring program that says I go out and take a sample every two weeks.’ You can easily miss the important events using those kinds of static monitoring approaches.”

“In the West, where the streamflow is dictated by snowmelt, a lot of monitoring programs already take that into account by tracking when the snow melts and intensifying sampling during snowmelt.”
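The thought example about ensemble disagreement can be sketched as a simple rule: with a limited sampling budget, sample on the days when the models disagree most. The code below is a minimal illustration under invented assumptions; the three model names and their predictions are hypothetical and are not taken from the Falling Creek Reservoir work.

```python
import statistics


def ensemble_spread(predictions_by_model):
    """Standard deviation across models at each time step."""
    series = list(predictions_by_model.values())
    n_steps = len(series[0])
    return [statistics.pstdev([s[t] for s in series]) for t in range(n_steps)]


def pick_sampling_days(predictions_by_model, budget):
    """Choose the `budget` days on which the models disagree the most."""
    spread = ensemble_spread(predictions_by_model)
    ranked = sorted(range(len(spread)), key=lambda t: spread[t], reverse=True)
    return sorted(ranked[:budget])


# Hypothetical 7-day forecasts from three competing model structures.
FORECASTS = {
    "linear":     [1, 2, 3, 4, 5.0, 6.0, 7.0],
    "saturating": [1, 2, 3, 4, 4.5, 4.8, 5.0],
    "threshold":  [1, 2, 3, 4, 8.0, 12.0, 16.0],
}

if __name__ == "__main__":
    for day, s in enumerate(ensemble_spread(FORECASTS)):
        print(f"day {day}: spread {s:.2f}")
    print("sample on days:", pick_sampling_days(FORECASTS, budget=2))
```

On the first four days the models agree exactly, so an observation there could not distinguish between them; the sampling budget goes to the later days, where a measurement would favor one model structure over the others.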

DR. STEVE BRANDT: I understand why you’re jazzed about short-term forecasts and how successful in apparently a short period of time you all have been. But the state of California is facing a really big decision about infrastructure to move water around in different ways that will take decades to work in. So there is still a role for longer-term projections.

Dr. Dietze: “I never mean to imply that we need to stop making long-term projections. It’s more that I think we need to balance the portfolio. The portfolio has been very biased towards long-term projections. For example, I’ve been part of the earth system modeling community working on global carbon budgets for most of my career; we made plenty of projections to 2100. And the problem is, I’ve never been to 2100 to see if I’m any good at it. But, on the other hand, I think the balanced portfolio gives us a way of iteratively refining the models that we use to make long-term projections. So while short-term forecasting doesn’t guarantee that our long-term forecasts are going to get better, I find it encouraging that when we get better at making short-term forecasts, those models then understand the processes better for making long-term projections as well.

“The thing that worries me about the long-term projections is there’s no feedback mechanism to see if we’re getting any better at it. So I don’t know whether the projections to 2100 that I made this year are any better than the ones I made 20 years ago.”

- Ecological Forecasting, book by Dr. Michael C. Dietze: An authoritative and accessible introduction to the concepts and tools needed to make ecology a more predictive science

- Journal article: Ecological forecasts: An emerging imperative: Planning and decision-making can be improved by access to reliable forecasts of ecosystem state, ecosystem services, and natural capital. Availability of new data sets, together with progress in computation and statistics, will increase our ability to forecast ecosystem change.

- Journal article: Prediction in ecology: a first-principles framework: Paper provides a general quantitative framework for analyzing and partitioning the sources of uncertainty that control predictability. The framework is used to make a number of novel predictions and reframe approaches to experimental design, model selection, and hypothesis testing.

- Bayes’ Theorem: a simple mathematical formula used for calculating conditional probabilities.

- Ecological Forecasting Initiative: The Ecological Forecasting Initiative is a grassroots consortium aimed at building and supporting an interdisciplinary community of practice around near-term (daily to decadal) ecological forecasts.