Ecosystem services are the many and varied benefits that humans gain from the natural environment – things such as food, water, wood, fish, and wildlife. While the concept of ecosystem services has been around for decades, it was the Millennium Ecosystem Assessment in the early 2000s that popularized it. The Assessment grouped ecosystem services into four broad categories: provisioning (such as the production of food and water); regulating (such as the control of climate and disease); supporting (such as nutrient cycles and oxygen production); and cultural (such as spiritual and recreational benefits).

Dr. Ben Bryant is a multidisciplinary scholar-practitioner working to integrate academic findings into practicable, on-the-ground policy and planning efforts. He has a background in environmental and economic modeling for decision support, with a focus on water management, ecosystem services, and decision making under uncertainty. He is currently a post-doctoral scholar based at the Natural Capital Project and Water in the West, two applied environmental research groups at Stanford University.

In this third in a series of three seminars hosted by the Delta Science Program on ecosystem services and the Delta, Dr. Bryant first discussed the modeling suite of process-based ecosystem service models available at the Natural Capital Project, and then focused on the idea of spatial targeting for investments in local ecosystem services under uncertainty, and how that can apply to the Central Valley and the Delta.

The two groups Dr. Bryant works with at Stanford are both very decision-oriented, working to connect academia with the world of policy and decision making. The Natural Capital Project focuses exclusively on ecosystem services, while Water in the West is more water oriented; one connecting point is hydrologic ecosystem services, such as the connection between forest management and water resources, agriculture, or impacts on sediment and water yield.

Part I: Overview of process-based ecosystem service modeling

There are a number of different ways that ecosystem services modeling can be done; at the Natural Capital Project, they use a production function approach to ecosystem services because it has implications for how things are operationalized in real life.

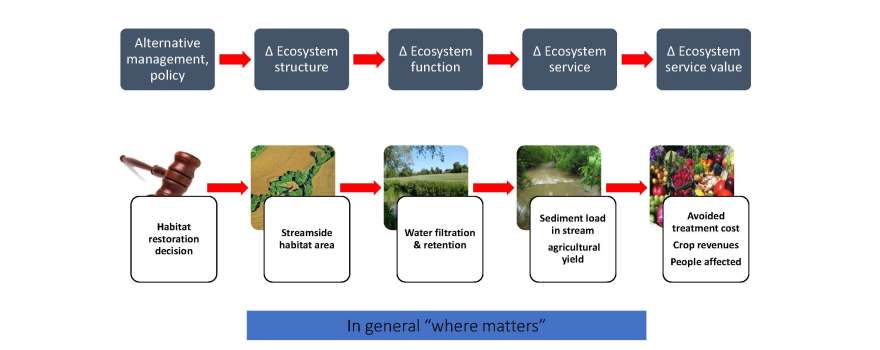

“What we’re talking about is this idea that we’re interested in changes on the landscape and we want to understand how those filter through all the way down to impact on people in terms that people care about,” Dr. Bryant explained. He gave the example of restoring riparian habitat in an agricultural zone and how that might translate into changes in ecosystem services. Whereas before there wasn’t a riparian buffer, now there is and that change in structure leads to a change in how the ecosystem services are functioning, such as changing the process of water filtration from the agricultural field to the stream or helping promote soil retention. That, in turn, translates to things that people actually care about.

“People, whether they are recreational users or water utilities, might care about sediment load in a stream, and if you’re the farmer who was working that land, you might care about the soil that’s retained and you might gain a benefit from having better soil retention on your land,” said Dr. Bryant. “Then so you have this obviously a direct cost where you have an area that was in production before that is now no longer in production, so there’s an impact in both directions there.”

The last step is translating those outcomes that people generally care about into metrics that resonate with them; this is often monetary, but not always, he said. “One example might be the change in sediment loads in the stream or concentration would affect the treatment costs that a water utility needs to pay in order to deliver water of adequate clarity to its customers, or the soil retention trade-off with cropping areas that affects crop revenue for farmers; those are monetary metrics. You might also have a broader benefit-relevant indicator for the number of people affected by stream clarity because if you have a lot of people going to visit a park with a river, they might prefer that the water is clean looking.”
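The chain Dr. Bryant describes, from a change in landscape structure to a change in function to metrics people care about, can be sketched in a few lines of Python. This is only an illustration of the idea, not one of the Natural Capital Project's models: the `Parcel` class, the function names, and every coefficient are hypothetical placeholders chosen to make the arithmetic easy to follow.

```python
from dataclasses import dataclass


@dataclass
class Parcel:
    has_riparian_buffer: bool
    soil_loss_tons: float      # hypothetical annual soil eroded from the field
    cropped_hectares: float    # area still in production


# Made-up coefficients for illustration only, not calibrated values.
BUFFER_TRAP_FRACTION = 0.5     # share of eroded soil a buffer keeps out of the stream
TREATMENT_COST_PER_TON = 12.0  # utility's cost per ton of sediment in the stream
REVENUE_PER_HECTARE = 900.0    # farmer's revenue per cropped hectare


def sediment_to_stream(parcel):
    """Structure -> function: a buffer traps part of the eroded soil."""
    trapped = BUFFER_TRAP_FRACTION if parcel.has_riparian_buffer else 0.0
    return parcel.soil_loss_tons * (1.0 - trapped)


def metrics(parcel):
    """Function -> metrics that different beneficiaries care about."""
    load = sediment_to_stream(parcel)
    return {
        "sediment_tons": load,
        "treatment_cost": load * TREATMENT_COST_PER_TON,
        "crop_revenue": parcel.cropped_hectares * REVENUE_PER_HECTARE,
    }


# The buffer takes 2 hectares out of production in this toy case.
before = Parcel(has_riparian_buffer=False, soil_loss_tons=100.0, cropped_hectares=50.0)
after = Parcel(has_riparian_buffer=True, soil_loss_tons=100.0, cropped_hectares=48.0)

change = {key: metrics(after)[key] - metrics(before)[key] for key in metrics(before)}
print(change)
```

In this toy case the utility's treatment cost falls while the farmer's crop revenue also falls, which is the "impact in both directions" noted above.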

When it comes to this process and with ecosystem structure, the ‘where’ is important for the activity. “If we had put this vegetation in the middle of the field, the impact on all the stuff downstream would obviously be different and probably less,” he said. “Similarly, where matters in terms of the beneficiaries or where people are, so undertaking restoration or enhancing the environment will have a bigger impact if it’s done in a place where lots of people are going to experience it.”

One of the goals is for the process to be accomplished within a reasonable timeframe so it can feed into decision making; part of that entails translating the science describing those relationships into software that can be used more easily than building something custom every single time.

Dr. Bryant then gave the example of water users in a watershed where the downstream users recognize that the actions and stewardship of upstream users affect the characteristics of water flows and water quality that they care about; they also care about having a high enough base flow to get through the dry season. So the question arises: what should be done in the upper landscape to promote that outcome?

Their hydrologist worked with some collaborators to develop a model that answered the question: which places on the landscape contribute more or less to base flow? That was translated into code by their software team and compiled into an executable package for Windows. The model has numerous inputs, many of them spatial, such as digital elevation maps and land use maps; there are some hydrologic parameters as well. The model essentially takes the inputs, feeds them through the equations as described in the paper, and produces a map of where things are happening on the landscape. In the map on the slide above, the darker areas are those that contribute more to dry season base flow relative to the lighter colored areas, so, for example, from a decision standpoint you might want to prioritize keeping those areas from being converted to impervious landscapes, he said.
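To make the decision step concrete, here is a minimal sketch of how an output map like this could be turned into a priority list. The model itself is not reproduced; the small grid, its values, and the `top_cells` helper are all hypothetical stand-ins for a real raster of base flow contribution.

```python
# Hypothetical per-cell index of contribution to dry-season base flow
# (higher = "darker" on the map). Values are invented for illustration.
baseflow_index = [
    [0.1, 0.4, 0.8],
    [0.2, 0.9, 0.7],
    [0.0, 0.3, 0.5],
]


def top_cells(grid, n):
    """Return the (row, col) positions of the n highest-valued cells."""
    cells = [
        (value, row, col)
        for row, line in enumerate(grid)
        for col, value in enumerate(line)
    ]
    cells.sort(key=lambda cell: cell[0], reverse=True)
    return [(row, col) for _, row, col in cells[:n]]


# Cells one might prioritize keeping out of impervious land uses.
priority = top_cells(baseflow_index, 3)
print(priority)
```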

They have taken that approach for a lot of different models at varying levels of rigor and sophistication, so some models have gone through the process of peer-review and software implementation, and others are closer to look-up tables. “In general, it’s kind of an ongoing demand-driven iteration process there where models that have weaknesses that are problems for users get more attention based on the use cases and engagement that we’re doing,” he said.

Dr. Bryant said they are most associated with the InVEST suite of models, but they have also developed other helpful tools in response to common analytic tasks that people have.

At present, there is a cascade of models and a Python API that power users can use to interact with the models and build interfaces or custom workflows around them. The user interface is built around a single run: set it up, run it, and look at the output. Some of the models need a lot of updates.

Where they hope to move in the future is to make the parts of the work that are not science much easier and more automated, so that users can run models faster and/or run them off of their computer and get results sooner.

“A big part of this is curating a lot of the commonly needed spatial data, which our own analysts and end users often complain about the amount of effort that’s required to identify all the parameters, and maps and things that are necessary to run the models,” he said. “The trade-off analysis and spatial optimization and things like that essentially involve running models lots of times and processing the results in a way that’s useful for your decision at hand, and so we’re trying to make that easier as well.”
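The "running models lots of times" part of that workflow can be sketched as a simple parameter sweep. The `toy_model` function below is a made-up stand-in for a single model run, and the parameter names and values are assumptions for illustration; a real workflow would call an actual ecosystem service model at that point.

```python
from itertools import product


def toy_model(buffer_width_m, rainfall_factor):
    """Stand-in for one model run; returns invented outcome metrics."""
    sediment = 100.0 * rainfall_factor / (1.0 + buffer_width_m / 10.0)
    crop_area = 50.0 - 0.05 * buffer_width_m
    return {"sediment": sediment, "crop_area": crop_area}


buffer_widths = [0, 10, 30]         # management option (hypothetical)
rainfall_factors = [0.8, 1.0, 1.2]  # uncertain driver (hypothetical)

# Run every combination of option and driver, keeping inputs with outputs.
runs = []
for width, rain in product(buffer_widths, rainfall_factors):
    result = toy_model(width, rain)
    runs.append({"buffer_width_m": width, "rainfall_factor": rain, **result})

# Process the results into something decision-relevant:
# the worst-case sediment load for each buffer width.
worst_case = {}
for run in runs:
    width = run["buffer_width_m"]
    worst_case[width] = max(worst_case.get(width, 0.0), run["sediment"])

print(worst_case)
```

Keeping the inputs alongside the outputs in each record makes it straightforward to summarize the runs in different ways later, for example by scenario rather than by management option.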

There are others working on developing ecosystem services models; many of them tend to focus on one particular set of needs, such as ecohydrologic models or carbon sequestration. Dr. Bryant encouraged people to look around for models that might meet their needs; they will vary in terms of data requirements and the complexity of the processes represented.

In order to have this information used in decision making, Dr. Bryant said that they are working on the science and translating that science into a process and tools that people can use. An important aspect is building success and sharing that success; by doing all of these things, they are both motivating people and reducing the barriers for them to use natural capital information in their decisions. He also pointed out that they have found iterative engagement and co-production or co-development to be effective and important when trying to actually get the science taken up and used.

As an example, they are working with a couple of different groups looking at the ecosystem service impacts of forest restoration in the Sierra Nevada. Fires have been suppressed for a long time, and there are benefits to restoring forests to a more fire-resilient state, so one question is, what are all the different ecosystem service impacts that could come from that? Depending upon who you are trying to convince to care, take action, or pay, they may all have different metrics.

“The point is that every stakeholder may have different things that they care about, so the more you can plan to address things to each stakeholder, the more likely you are to get things taken up,” Dr. Bryant said.

Question: Who is the target customer for these tools?

Answer: “First, they are all free so I’m not trying to sell them,” Dr. Bryant said. “In terms of target audience, there’s the tool user and then there’s the audience for the analysis. We’ve worked with Unilever Corporation to help figure out environmental impacts of their sourcing decisions, and they are trying to bring those tools into their own lifecycle assessment workflow. A lot of work is with conservation organizations wanting to not just preserve habitat but also think about how to generate other ecosystem services as well. Development planning and spatial planning exercises. In terms of the audience, it’s essentially all decisionmakers whose decisions are affected by and/or affects spatial outcomes basically. It’s essentially government, NGOs, and the private sector.”

Part II: Spatial targeting under uncertainty, with applications to the Central Valley

Dr. Bryant then turned to the question of how the different types of ecosystem service modeling approaches can fit into a spatial planning context.

The landscape structure is providing benefits to people, but maybe it’s in the process of being degraded or maybe it’s being subjected to external forces, and we want to know where on the landscape we should target changes that we can control in order to achieve multiple objectives. Those changes could be things like changing irrigation practices, changing the crops, or restoring degraded land; the objectives can be very specific, such as meeting minimum instream flow requirements, or broad, such as improving agricultural livelihoods.

Dr. Bryant said it’s important to consider that our ability to characterize systems and predict the impact of decisions is limited by the large number of uncertainties. “In general, we often have a lot of disagreement and inability to perfectly characterize even the starting state that we’re working in,” he said. “Land use cover maps are very important inputs to our models and given that there’s uncertainty and disagreement in those, so it’s important to see if that actually matters.”

We’re also not generally very good at predicting future drivers, he noted, presenting an example of forecasts for water demand in Seattle in which each line is a forecast made at a different point in time.

“You can see how good they are,” he said. “And you can imagine that planning for those forecasts would lead to significant error and costly policies.”

We also have an imperfect ability to describe how the system will evolve, which is a function both of parameters that go into models and also the structural form of those models, he said.

“A lot of numbers go into models that represent physical processes and suspiciously, many of them are the same exact number when we know that would not really be the case in real life,” he said. “You also don’t know your physical system. For example, you might have a groundwater system which in general we are not able to characterize very accurately, so we don’t necessarily know perfectly how actions on the surface will percolate down into sustainability system considerations and flows within the aquifer.”

Dr. Bryant also pointed out that we have imperfect knowledge of what people actually care about. We have to know generally the types of objectives people have in mind, such as recreation, water quality, species preservation, or agriculture, but our way of engaging and measuring these preferences is always imperfect. Economists tend to focus on market behavior to reveal preferences, but information can also come from voting and ballot measures or even focus groups. Each of these has gaps in characterizing how people are willing to trade off different objectives and what’s actually important, he said.

“To specifically connect this to California, I think we would agree that with most people, especially in the Central Valley, there’s an interest in agricultural livelihoods, having clean water, not polluting groundwater, and no mining waste in their streams. There are also a lot of uncertainties about things such as demand for solar or whether almonds are going to continue to be very important or not, climate change affecting how agriculture works, and earthquakes. Then there’s things that we can do with the land management, such as land use planning, conservation space, including adjusting cropping patterns, changing land use in terms of zoning, targeting restoration, the parameters of groundwater sustainability plans that are developed through SGMA, and state infrastructure decisions.”

Even non-spatial actions generally have spatial consequences, he pointed out. “If we’re talking about land use, the water price or carbon price translates into different spatially varying suitabilities for different types of actions on the landscape. Often, things are spatial even when you’re not thinking in those terms.”

In the Central Valley, the agricultural economy is under potential threat from the climate and water availability, and several programs recognize ecosystem services as something to be managed for or incentivized. So what land management decisions can best promote an economically prosperous, climate resilient, ecologically rich Central Valley?

“In connecting this uncertainty angle with decision framing, the general approach that I advocate is that we do need to use models because they contain a lot of information,” Dr. Bryant said. “But we also need to be cautious and humble about putting weight on any particular model run or even any model or set of models, given all of those uncertainties because any single run essentially captures one particular set of assumptions about the starting state, how things evolve and what external forces might act on that system.”

“So the high level idea is that you have this workflow where you have system models that link management options and uncertainties into outcome metrics that you care about, but you want to explore that extremely thoroughly to build insight about relationships, rather than trying to pick the best answer or trust the model,” he said.

So they generate maps that recommend where to do what on the landscape, and the map will vary depending on the things that are of the highest priority. He presented an example of two maps and a trade-off curve where each dot represents a landscape. “If you care most about water yield, then in this case, it’s saying do terracing in (this is in Africa) these places where you see pink, but if you care most about sediment retention, maybe you should focus on mitigating a bunch of dirt roads they have, shown in blue.”

“So the point is that each priority combination that you pick in terms of your objectives leads to a potentially different landscape that you would want to implement. Then you can do that and explore the implications, and you can say, maybe across all of my potential preference combinations, what should I be doing no matter what.”
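One way to picture "what should I be doing no matter what" is to pick the best landscape for each combination of priorities and then look for the actions those landscapes share. The sketch below assumes a simple weighted sum of two normalized scores; the landscapes, their actions, and their scores are all hypothetical, and the weighting scheme is an assumption rather than the method used in the project shown.

```python
# Hypothetical candidate landscapes with the actions they include and
# invented, normalized scores (0 to 1) for two objectives.
landscapes = {
    "A": {"actions": {"terracing", "road_mitigation"},
          "water_yield": 0.9, "sediment_retention": 0.4},
    "B": {"actions": {"road_mitigation", "riparian_buffer"},
          "water_yield": 0.4, "sediment_retention": 0.8},
    "C": {"actions": {"road_mitigation"},
          "water_yield": 0.6, "sediment_retention": 0.6},
}


def best_landscape(weight_on_water):
    """Pick the landscape with the highest weighted score."""
    def score(name):
        option = landscapes[name]
        return (weight_on_water * option["water_yield"]
                + (1.0 - weight_on_water) * option["sediment_retention"])
    return max(landscapes, key=score)


# Each weight is one "priority combination".
weights = [0.0, 0.25, 0.5, 0.75, 1.0]
chosen = {weight: best_landscape(weight) for weight in weights}

# Actions that appear in every chosen landscape, whatever the priorities.
no_regret = set.intersection(
    *(landscapes[name]["actions"] for name in chosen.values())
)
print(chosen, no_regret)
```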

One way to tie everything together is called the XLRM framework, which looks at the uncertainties, policy levers, relationships, and metrics. “The idea is that the exercise of actually just tracking them in the first place can be helpful in elimination, and it’s also good to revisit your analysis, even if you aren’t quantitatively able to speak to all of these issues, being able to go back and forth between your qualitative assessment and your quantitative modeling can be very helpful,” Dr. Bryant said.
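Simply tracking the four XLRM elements can be done with a very small data structure. The sketch below is one possible way to record them, filled in with Central Valley items mentioned in the talk; the `XLRM` class and its `summary` method are hypothetical, and the single entry under relationships is a placeholder description rather than a specific model.

```python
from dataclasses import dataclass, field


@dataclass
class XLRM:
    uncertainties: list = field(default_factory=list)  # X: outside our control
    levers: list = field(default_factory=list)         # L: actions we can take
    relationships: list = field(default_factory=list)  # R: models linking X and L to M
    metrics: list = field(default_factory=list)        # M: outcomes people care about

    def summary(self):
        """Count the entries tracked under each element."""
        return {
            "X": len(self.uncertainties),
            "L": len(self.levers),
            "R": len(self.relationships),
            "M": len(self.metrics),
        }


central_valley = XLRM(
    uncertainties=["demand for solar", "future of almonds",
                   "climate change", "earthquakes"],
    levers=["cropping patterns", "zoning", "targeted restoration",
            "groundwater sustainability plan parameters",
            "state infrastructure decisions"],
    relationships=["ecosystem service models (placeholder)"],
    metrics=["agricultural livelihoods", "clean water", "groundwater quality"],
)
print(central_valley.summary())
```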

Another challenge when building a system to translate actions on the landscape into outcomes is knowing where to draw the boundaries of the system. With water, everything is connected, so having a manageable area for analysis is important.

He presented a flow chart for iterating between participatory and computer-assisted policy development that illustrated a methodology for decision making under deep uncertainty.

“One of the things we can do is generate ‘frontiers’ or tradeoff curves for different objectives that we care about and we can do that in many states of the world,” Dr. Bryant explained. “You can use economic valuation or other participatory or multi-criteria processes to filter down into relevant potential solutions, and you can take those and test them against other future states of the world and see how well they do. For example, a land use policy that you develop that assumes we’ve augmented water supply and you have highly reliable surface water will probably not do very well in a world where climate change is more severe than anticipated, and we did not build more storage, for example. So you can test failure scenarios and try to augment your decisions in a way that will make them more robust to different states of the world. Of course it’s always required that you monitor and revisit things.”
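Testing candidate policies against other states of the world can be sketched with a small table of outcomes. The example below uses minimax regret as the robustness measure, which is one common choice and an assumption here, not something stated in the talk; the policy names, futures, and scores are hypothetical.

```python
# Hypothetical performance (higher is better) of each policy in each future.
performance = {
    "assume_reliable_surface_water": {"wet_with_storage": 10, "dry_no_storage": 2},
    "diversified_land_use": {"wet_with_storage": 8, "dry_no_storage": 6},
}
futures = ["wet_with_storage", "dry_no_storage"]

# Best achievable score in each future, across all policies.
best_in_future = {
    future: max(scores[future] for scores in performance.values())
    for future in futures
}

# Regret = how far a policy falls short of the best option in a given future.
# A policy's maximum regret is its worst shortfall across all futures.
max_regret = {
    name: max(best_in_future[future] - scores[future] for future in futures)
    for name, scores in performance.items()
}

# The most robust policy under this measure has the smallest maximum regret.
most_robust = min(max_regret, key=max_regret.get)
print(max_regret, most_robust)
```

In this toy table, the policy built around reliable surface water does best in the future it assumes but fares poorly in the dry future, so the diversified policy comes out as more robust.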

Dr. Bryant then showed an example of a project where they ran multiple ecosystem models with numerous different parameters and combinations.

“Every single dot is a landscape, and these are for three different services: one is water yield, one is sediment retention, and one is soil loss, which are closely related but they are affected in space because you can lose soil without it going into a stream potentially. There are sliderbars to help users dynamically explore what landscape changes are made depending on the assumptions used and different parameter numbers or scenarios to explore the policy performance.”

He noted that right now, it’s showing everything, but if your priority is water yield, the tool can show you the things you should do if you are most interested in high water yield; or similarly, if your priority is sediment, it can show you things you should do if you care mostly about sediment.
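The filtering described here can be approximated in code by first keeping only the landscapes that are not outperformed on every objective (the trade-off frontier) and then picking from that set according to a priority. This is a generic sketch of that idea, not the tool shown in the seminar; the five landscapes and their scores are invented.

```python
# Hypothetical landscapes scored on (water_yield, sediment_retention);
# higher is better on both axes.
points = {
    "L1": (0.9, 0.2),
    "L2": (0.7, 0.6),
    "L3": (0.4, 0.8),
    "L4": (0.5, 0.5),
    "L5": (0.3, 0.3),
}


def dominates(a, b):
    """True if a is at least as good as b everywhere and better somewhere."""
    return (all(x >= y for x, y in zip(a, b))
            and any(x > y for x, y in zip(a, b)))


# Keep only landscapes that no other landscape dominates.
frontier = sorted(
    name for name, score in points.items()
    if not any(dominates(other, score) for other in points.values())
)

# Filter the frontier by priority, as the slider bars would.
best_for_water = max(frontier, key=lambda name: points[name][0])
best_for_sediment = max(frontier, key=lambda name: points[name][1])
print(frontier, best_for_water, best_for_sediment)
```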

“The idea is that you can use this type of display in sort of a participatory process where you can think through what these different scenarios actually look like in the real world and overlay the landcover and other aspects of the economy, and work with stakeholders to do a dynamic envisioning of the future and what it means for the different objectives that they care about.”

Dr. Bryant noted that when people hear about ecosystem services, they tend to ‘leap’ to the idea of monetary valuation, and while there are some for whom that is a main purpose, there are also numerous papers explaining why that is not a good idea. “I like to think about it as all of this analysis is in the interest of supporting decisions, which is about helping a set of people choose between future states of the world, or at least deciding on efforts to move things toward future states of the world that they prefer over others,” he said. “When you look at it in that abstract sense, it helps you think about valuation versus participatory processes and recognize that different decision processes and criteria give more or less representation or weight to different people, or whether they are included at all.”

“Also some people know what they value, and some people learn their values through the process of engaging through analyses like these or stakeholder processes, and deliberating with people,” he continued. “So all of these different methods, whether it’s valuation or deliberative multi-criteria assessment, or just voting, they have different implications for how people are represented and how their assumptions evolve.”

He also noted that there is an implicit aggregation problem at two levels: within each individual, who needs to decide how to trade off and aggregate their own preferences across different outcomes, and across people, who come together with different preferences for different combinations of ecosystem services that must be filtered into one chosen world.

IN SUMMATION …

- Process-based ecosystem service modeling is feasible to incorporate into decision making, but it needs balance and iteration to harmonize analytic capability of the people involved, data availability, and engagement of decision makers.

- Linking decision impacts to multiple outcomes can help stakeholders explore trade-offs, with monetary valuation, or not.

- Uncertainty is everywhere in ecosystem services assessment, but if you’re diligent about acknowledging and planning for it from the beginning, then you can handle it and consider its implications in a decision-relevant way.

FOR MORE INFORMATION …

- Click here for more information on Stanford’s Natural Capital Project.

- Click here for more information on the InVEST suite of models.

- Click here for more information on Stanford’s Water in the West Program.

- Click here for more on the Millennium Ecosystem Assessment.

PREVIOUS ARTICLES IN THIS SERIES …

- Part 1: BROWN BAG SEMINAR: Towards multi-functional resilient landscapes: environmental heterogeneity as a bridge among diverse ecosystem services

- Part 2: BROWN BAG SEMINAR: Ecosystem services, conservation, and the Delta
